Is AI-Powered Mind-Reading Technology Around the Corner?

In the ever-evolving landscape of artificial intelligence, mind reading is moving from science fiction toward reality. Recent research at the University of Texas at Austin and at Meta has produced AI systems that can decode aspects of what a person is hearing, imagining, or seeing from recordings of their brain activity. This blog looks at these advances, the ethical questions they raise, and their potential impact on various aspects of our lives.

The Pioneering Minds

Imagine if your thoughts could turn into words without you saying them out loud. At the University of Texas at Austin, a team led by Jerry Tang and Alexander Huth built a non-invasive language decoder: a system that turns recorded brain activity into words without surgery or implants. The brain activity comes from functional magnetic resonance imaging (fMRI), which works something like a camera for the brain by tracking changes in blood flow. The decoder does not read thoughts directly. Instead, it translates the patterns in those recordings into text. This could change how we communicate, especially for people who cannot speak.

The Non-Invasive Language Decoder

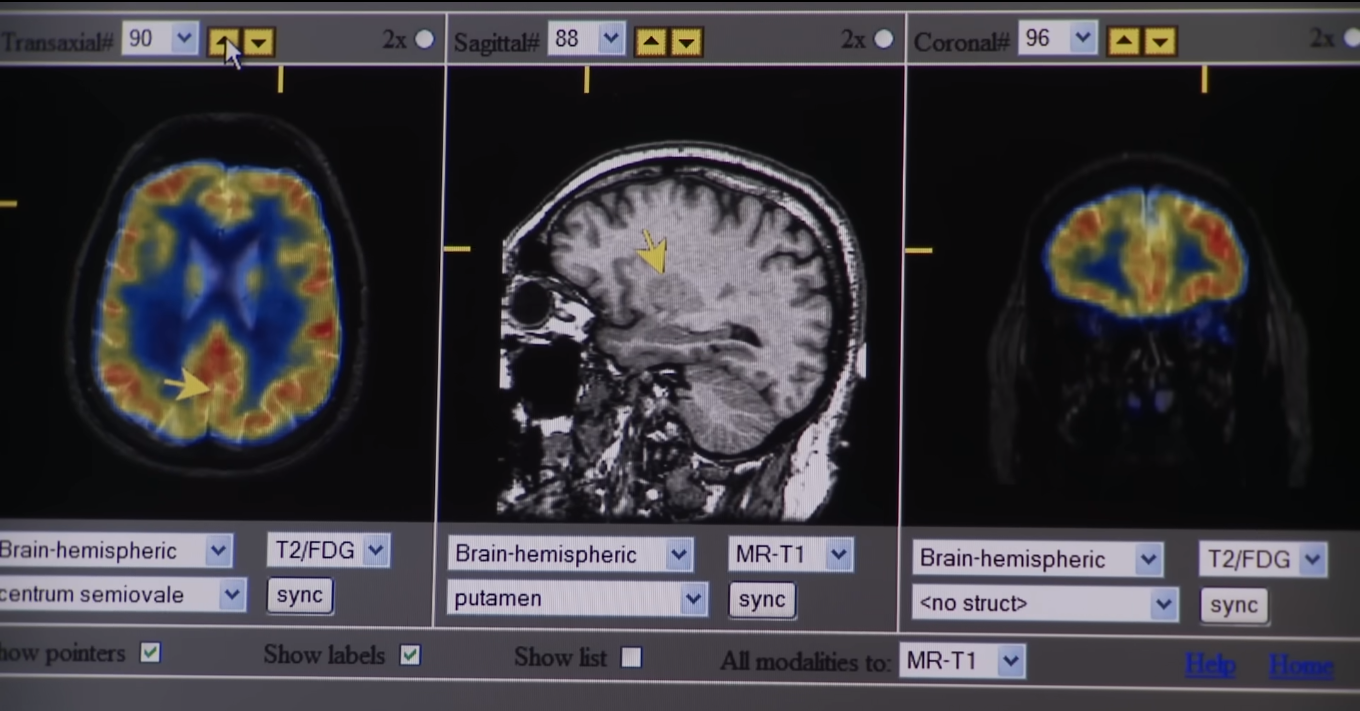

The decoder is a significant leap in decoding brain activity. Its key feature is its integration with generative AI. This lets it reconstruct continuous language while a person listens to speech, imagines speaking, or watches silent videos. Earlier models, by contrast, often recognized only isolated words or phrases.

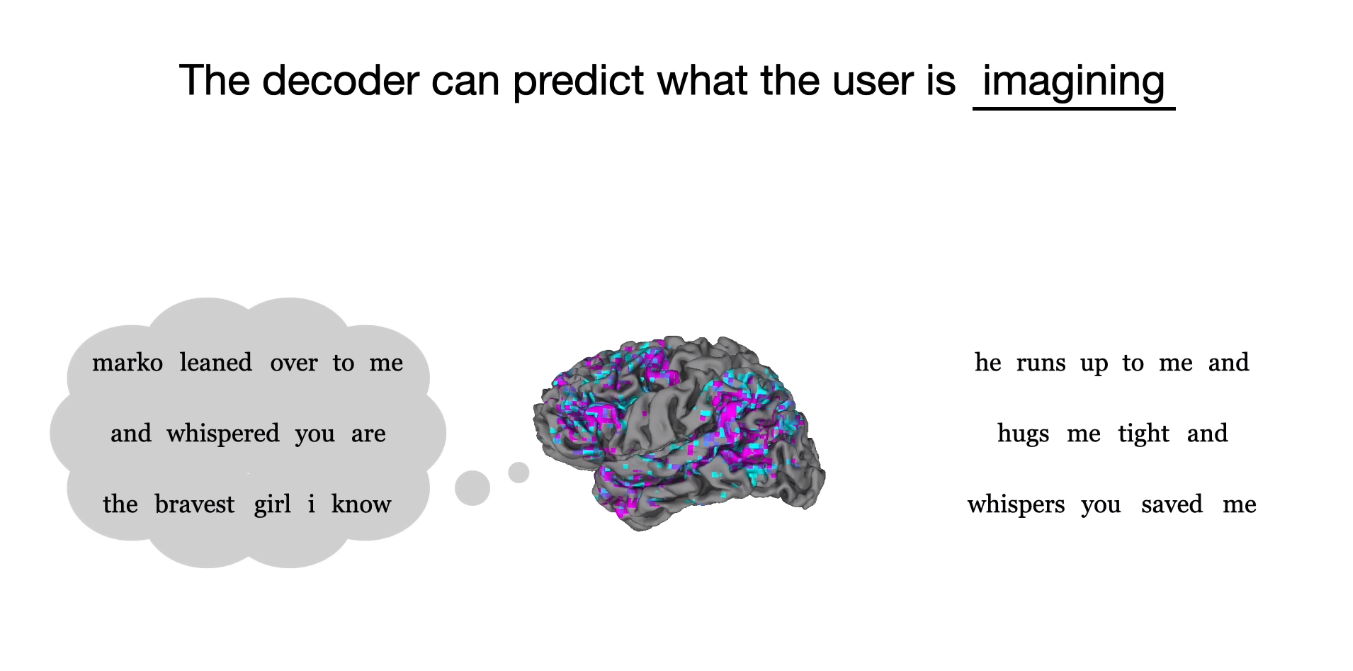

The Semantic Decoder: Decoding Natural Language

The semantic decoder is designed to capture meaning rather than exact wording: it reconstructs the gist of what a person hears or imagines. It works from fMRI recordings, so nothing has to be implanted in the brain. fMRI has high spatial resolution, meaning it is good at pinpointing where in the brain activity occurs. Its temporal resolution is low, however: the blood-flow signal it measures rises and falls over roughly 10 seconds, far more slowly than the pace of speech.
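The small Python simulation below, which is not taken from the research, makes the timing problem concrete. Each word triggers a brief burst of activity, and the measured signal is that activity blurred by a slow, gamma-shaped hemodynamic response. The scanner then samples the result once every two seconds. The response shape, the 2-second sampling interval, and the speaking rate are all illustrative assumptions.

```python
import numpy as np

def hrf(t):
    """Illustrative hemodynamic response: a gamma curve (shape 6, scale 1 s) peaking 5 s after activity."""
    return t ** 5 * np.exp(-t) / 120.0

dt = 0.1             # simulation step (s)
tr = 2.0             # assumed time between fMRI images (s)
n_steps = 300        # 30 s of simulated time
word_gap = 0.4       # assumed speaking rate: one word every 0.4 s

# Neural activity: a brief impulse at each of 50 word onsets (the first 20 s)
stimulus = np.zeros(n_steps)
word_steps = np.arange(50) * round(word_gap / dt)
stimulus[word_steps] = 1.0

# The measured signal is that activity blurred by the slow hemodynamic response
kernel_t = np.arange(200) * dt          # 20 s kernel
kernel = hrf(kernel_t)
bold = np.convolve(stimulus, kernel)[:n_steps] * dt

# The scanner only samples the blurred signal once per TR
samples = bold[:: round(tr / dt)]
print(f"{len(word_steps)} words -> {len(samples)} fMRI samples, about {tr / word_gap:.0f} new words per sample")
print(f"a single word's response peaks {kernel_t[np.argmax(kernel)]:.1f} s after the word")
```

Under these assumptions, each sample blends several newly spoken words with the lingering responses to earlier ones. That is why a single fMRI image cannot be traced back to one word.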

Because each fMRI image blends the brain's responses to many words, the researchers could not simply decode one word at a time. Instead, the decoder generates candidate word sequences and predicts the brain activity each candidate should evoke. It then keeps the candidates whose predictions best match the recorded activity. The result is a paraphrase in natural-sounding language that captures the meaning of what a person heard or imagined, though not always in the original words.
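One way to picture this workaround is to score whole candidate word sequences against a recorded response instead of decoding words one at a time. The sketch below is a toy version, not the researchers' model. Several details are assumptions made for illustration:

- the word features
- the linear encoding weights
- the rule that one blurred sample simply sums the features of every word in its window

```python
import numpy as np

rng = np.random.default_rng(1)
vocab = ["she", "he", "opened", "closed", "the", "door", "window"]
emb = {w: rng.normal(size=16) for w in vocab}   # assumed stand-in semantic features
W = rng.normal(size=(16, 50))                   # assumed encoding model: features -> 50 voxels

def predict_response(words):
    """Toy encoding model: one blurred fMRI sample sums the features of every word in the window."""
    return sum(emb[w] for w in words) @ W

def rank_candidates(recorded, candidates):
    """Order candidate word sequences by how well their predicted response matches the recording."""
    errors = [np.sum((predict_response(c) - recorded) ** 2) for c in candidates]
    return [candidates[i] for i in np.argsort(errors)]

heard = ["she", "opened", "the", "door"]
recorded = predict_response(heard) + 0.5 * rng.normal(size=50)   # noisy simulated recording
candidates = [
    ["he", "closed", "the", "window"],
    ["she", "opened", "the", "door"],
    ["she", "closed", "the", "door"],
]
best = rank_candidates(recorded, candidates)[0]
print("best-matching candidate:", " ".join(best))
```

Because this toy model sums features, it cannot tell different word orders apart. In the real system, a language model supplies that structure.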

The Intricacies of the Encoding Model and Generative AI

The encoding model predicts how a person's brain will respond to a given piece of natural language. To train it, the researchers recorded brain responses while participants listened to 16 hours of spoken narrative stories. GPT-1, an early predecessor of the models behind ChatGPT, supplies the language structure: it proposes likely next words so that candidate sequences read like real English. Beam search then keeps only the most promising candidates at each step, in three stages:

1. The language model extends every candidate with possible next words.
2. The encoding model scores each extension against the recorded brain activity.
3. The best-scoring few candidates are carried forward.

This lets the decoder refine its guess as more brain data arrives.
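The combination of an encoding model, a language model, and beam search can be sketched in Python. This is a simplified illustration under stated assumptions:

- A ridge-regression encoding model is fit on synthetic data.
- Each word produces one brain response, with no temporal blurring.
- A uniform toy language model stands in for GPT-1.

The names `fit_ridge`, `beam_search`, and `lm_next` are hypothetical.

```python
import numpy as np

def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: weights mapping features X (n x d) to responses Y (n x v)."""
    d = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(d), X.T @ Y)

def beam_search(observed, vocab_emb, weights, lm_next, beam_width=3, lm_weight=1.0):
    """Decode one word per observed response row (toy assumption: no temporal blurring)."""
    beams = [([], 0.0)]  # (word sequence, cumulative score)
    for r in observed:
        candidates = []
        for seq, score in beams:
            prev = seq[-1] if seq else None
            for word, p in lm_next(prev).items():            # language model proposes next words
                predicted = vocab_emb[word] @ weights          # encoding model predicts the response
                fit = -np.sum((predicted - r) ** 2)            # Gaussian log-likelihood up to a constant
                candidates.append((seq + [word], score + fit + lm_weight * np.log(p)))
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = candidates[:beam_width]                        # keep only the best continuations
    return beams[0][0]

rng = np.random.default_rng(0)
words = ["i", "went", "to", "the", "store", "home"]
vocab_emb = {w: rng.normal(size=8) for w in words}
W_true = rng.normal(size=(8, 20))                              # hidden "brain" mapping to 20 voxels

# Synthetic training data: random words, their features, and noisy responses
train_words = rng.choice(words, size=500)
X = np.stack([vocab_emb[w] for w in train_words])
Y = X @ W_true + 0.1 * rng.normal(size=(500, 20))
W_hat = fit_ridge(X, Y, alpha=1.0)

def lm_next(prev):
    """Hypothetical toy language model: uniform over the vocabulary."""
    return {w: 1.0 / len(words) for w in words}

sentence = ["i", "went", "to", "the", "store"]
observed = np.stack([vocab_emb[w] @ W_true for w in sentence]) + 0.1 * rng.normal(size=(5, 20))
decoded = beam_search(observed, vocab_emb, W_hat, lm_next)
print("decoded:", " ".join(decoded))
```

You could replace `lm_next` with a real language model's next-word probabilities. The language model would then rule out ungrammatical candidates, and the encoding model would choose among the grammatical ones.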

Testing and Results

Decoders were trained separately for each participant and then evaluated on how well the decoded text matched what the participants heard. The decoded word sequences often captured the gist of the original and sometimes reproduced exact words and phrases. This shows that fMRI recordings carry enough semantic information to recover language. The researchers also examined how different brain regions redundantly encode language representations at the word level, which adds another layer to the findings.
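To judge whether a decoded sequence matches what someone heard, you need a similarity score. Below is a minimal sketch of one standard metric, word error rate (WER). It illustrates the idea and is not necessarily how the researchers scored their decoder.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance (substitutions + insertions + deletions) divided by reference length."""
    ref, hyp = reference.split(), hypothesis.split()
    # dist[i][j] = edits needed to turn ref[:i] into hyp[:j]
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i
    for j in range(len(hyp) + 1):
        dist[0][j] = j
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(dist[i - 1][j] + 1,         # deletion
                             dist[i][j - 1] + 1,         # insertion
                             dist[i - 1][j - 1] + cost)  # substitution or match
    return dist[len(ref)][len(hyp)] / len(ref)

print(word_error_rate("the dog ran across the yard", "a dog ran through the yard"))
```

WER counts any paraphrase as an error. A decoder that captures the gist in different words therefore scores poorly on it, and meaning-based metrics are better suited to that kind of output.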

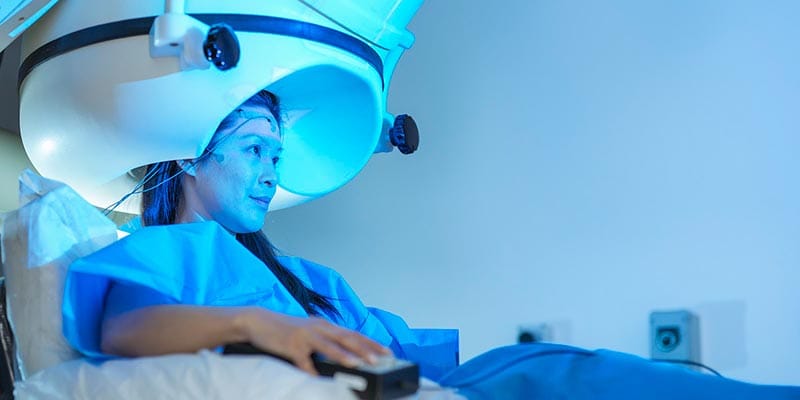

Meta's Revolutionary Leap into Mind Reading

Meta, the social media company, entered the mind-reading arena with an AI system that analyzes brain activity as it happens and decodes what a person is looking at. Instead of fMRI, Meta used magnetoencephalography (MEG), which captures thousands of measurements of brain activity per second. Below, we look at the components of Meta's system, how it was trained with self-supervised learning, and the parallels the researchers observed between artificial neurons and the human brain.

MEG Technology and Real-Time Brain Activity Analysis

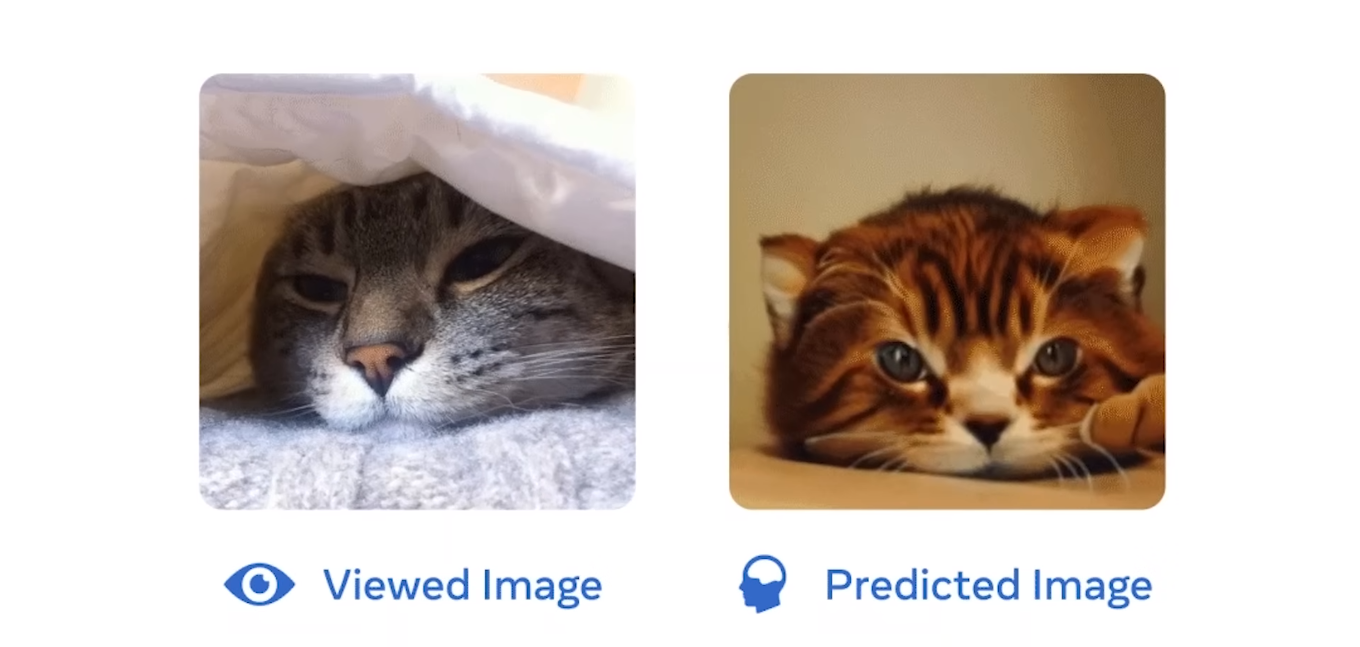

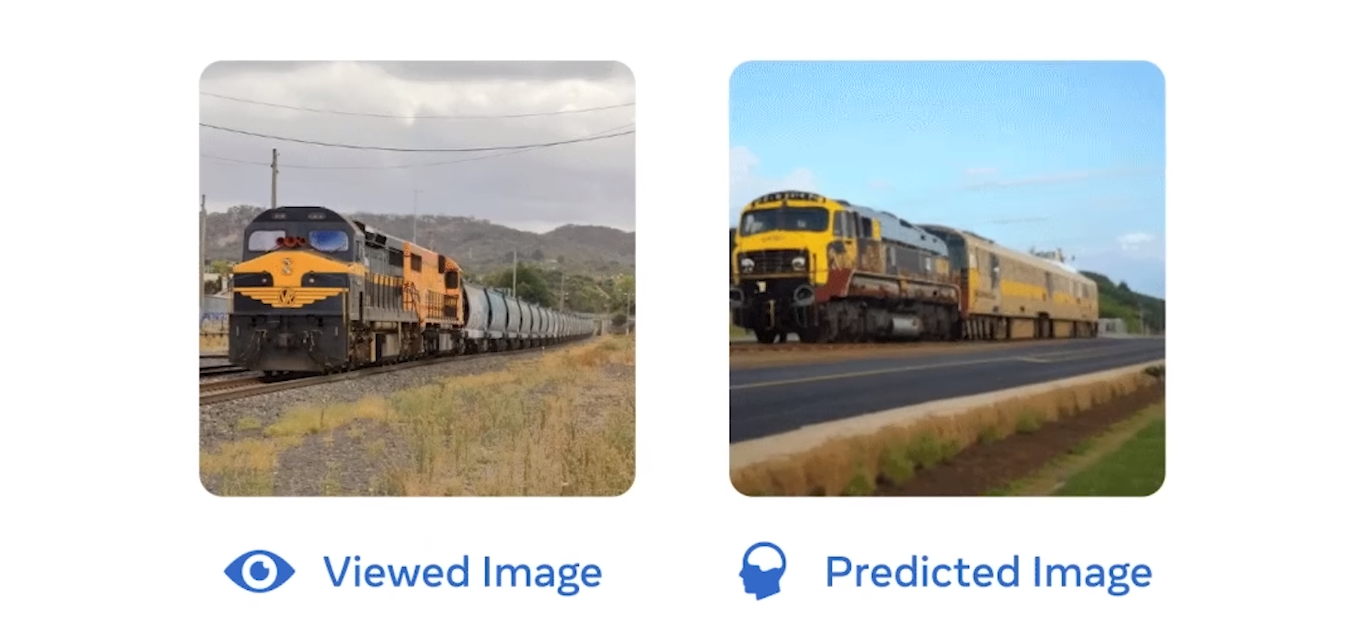

MEG is a non-invasive neuroimaging technique that records the magnetic fields produced by neural activity. Compared with fMRI, it trades spatial precision for speed, which is what makes it possible to decode visual representations in real time. Meta's system has three components:

- An image encoder turns images into numerical representations (embeddings) independently of the brain.
- A brain encoder learns to map MEG signals onto those image embeddings.
- An image decoder generates a plausible image from the brain-derived representation.
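The three-part pipeline can be illustrated with a deliberately simple Python sketch on synthetic data. It rests on three assumptions:

- The image embeddings are random vectors standing in for a pretrained image encoder.
- The brain encoder is a linear ridge regression rather than the deep network a real system would use.
- Retrieval replaces the image decoder: the sketch picks the closest candidate image embedding instead of generating a new image.

```python
import numpy as np

rng = np.random.default_rng(2)
n_trials, n_channels, n_times, emb_dim = 400, 30, 20, 12

# Stand-in "image encoder" output: one embedding per viewed image (assumption: random vectors)
image_emb = rng.normal(size=(n_trials, emb_dim))

# Synthetic MEG: each trial's flattened sensor window is a hidden linear function of its image, plus noise
mixing = rng.normal(size=(emb_dim, n_channels * n_times))
meg = image_emb @ mixing + 0.5 * rng.normal(size=(n_trials, n_channels * n_times))

def fit_brain_encoder(meg_train, emb_train, alpha=10.0):
    """Linear 'brain encoder': ridge regression from flattened MEG windows to image embeddings."""
    d = meg_train.shape[1]
    return np.linalg.solve(meg_train.T @ meg_train + alpha * np.eye(d), meg_train.T @ emb_train)

def retrieve(meg_trial, weights, candidate_emb):
    """Stand-in for the image decoder: index of the candidate image with the highest cosine similarity."""
    pred = meg_trial @ weights
    sims = candidate_emb @ pred / (np.linalg.norm(candidate_emb, axis=1) * np.linalg.norm(pred))
    return int(np.argmax(sims))

W = fit_brain_encoder(meg[:300], image_emb[:300])              # train on the first 300 trials
hits = [retrieve(meg[i], W, image_emb[300:]) == i - 300 for i in range(300, 400)]
print(f"top-1 retrieval accuracy on 100 held-out trials: {np.mean(hits):.2f}")
```

Retrieval keeps the example small and easy to check. A generative image decoder would instead use the predicted embedding to condition an image generator.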

Self-Supervised Learning with DINOv2

Self-supervised learning is a notable aspect of Meta's research. Unlike models trained with traditional supervised learning, DINOv2 learned to represent images on its own, without human-written labels. Meta reports that this kind of training helps AI systems develop brain-like representations: the artificial neurons in the model tend to respond to images in ways that resemble the activity of neurons in the brain. This correspondence between AI systems and the brain opens up new possibilities for real-time decoding and interpretation.
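The NumPy sketch below illustrates how a model can learn from images without labels. It implements the self-distillation objective from the original DINO method, which DINOv2 builds on with additional training terms. A "student" network and a "teacher" network see different augmented views of the same image. The student is trained to match the teacher's centered, sharpened output, while the teacher's weights and the center are slow exponential moving averages. The random arrays stand in for network outputs, and the temperatures and momentum are illustrative values.

```python
import numpy as np

def softmax(z, temperature):
    z = z / temperature
    z = z - z.max(axis=-1, keepdims=True)   # subtract the row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def dino_loss(student_out, teacher_out, center, t_student=0.1, t_teacher=0.04):
    """Cross-entropy between the teacher's centered, sharpened output and the student's output.

    The two outputs come from different augmented views of the same images; no labels are used.
    """
    p_teacher = softmax(teacher_out - center, t_teacher)      # fixed target (no gradient in practice)
    log_p_student = np.log(softmax(student_out, t_student) + 1e-12)
    return float(-(p_teacher * log_p_student).sum(axis=-1).mean())

def ema(old, new, momentum):
    """Exponential moving average, used for both the teacher weights and the center."""
    return momentum * old + (1.0 - momentum) * new

rng = np.random.default_rng(3)
teacher_out = rng.normal(size=(8, 32))                        # 8 images, 32 output scores (view 1)
student_out = teacher_out + 0.1 * rng.normal(size=(8, 32))    # student's view 2 of the same images
center = np.zeros(32)

loss_matched = dino_loss(student_out, teacher_out, center)
loss_shuffled = dino_loss(student_out[::-1], teacher_out, center)   # pair views of different images
center = ema(center, teacher_out.mean(axis=0), momentum=0.9)        # update the center after each batch
print(f"matched views: {loss_matched:.3f}, mismatched views: {loss_shuffled:.3f}")
```

The loss is lower when the paired outputs come from the same image than when they come from different images. Minimizing it pushes the model to give consistent representations to different views of the same image, with no labels involved.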

Reading Minds in Real-Time

Meta's system can reconstruct an approximation of the images a person is viewing almost as they see them, which is a significant breakthrough. The potential applications of real-time decoding are vast, ranging from medical diagnosis to personalized user experiences. The two projects also illustrate different strategies for advancing mind-reading technology. The University of Texas at Austin team used slow but spatially precise fMRI to decode language, while Meta used fast MEG to decode images.
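Real-time decoding means re-running a decoder on the most recent slice of brain data as new samples stream in. Below is a minimal Python sketch of that sliding-window loop. The 1,000 Hz sampling rate, the 500 ms window, the 100 ms step, and the `decode_window` placeholder are all hypothetical choices, not details of Meta's system.

```python
from collections import deque
import numpy as np

def sliding_windows(stream, window, step):
    """Yield the latest `window` samples each time `step` new samples have arrived."""
    buffer = deque(maxlen=window)             # the oldest samples drop off automatically
    for i, sample in enumerate(stream, start=1):
        buffer.append(sample)
        if i >= window and (i - window) % step == 0:
            yield np.array(buffer)

def decode_window(window):
    """Hypothetical placeholder for a trained decoder (e.g. a brain encoder plus image retrieval)."""
    return window.mean(axis=0)

rate_hz = 1000                                # assumed MEG sampling rate
n_channels = 4                                # tiny stand-in; real MEG systems have far more sensors
rng = np.random.default_rng(4)
meg_stream = (rng.normal(size=n_channels) for _ in range(2 * rate_hz))   # 2 s, one sample at a time

outputs = [decode_window(w) for w in sliding_windows(meg_stream, window=rate_hz // 2, step=rate_hz // 10)]
print(f"{len(outputs)} decodes from 2 s of data (one every 100 ms, each using the last 500 ms)")
```

The window length sets how much brain activity each decode sees, and the step sets how often the output refreshes. A real system would tune both to balance accuracy against latency.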

In conclusion, the rapid advances in mind-reading technology bring both remarkable possibilities and serious ethical dilemmas. As we begin to understand the human brain in new ways, it is crucial to proceed carefully, weighing the potential impact on privacy, individual autonomy, and societal norms. The conversation around mind reading with AI is just beginning, and its exploration promises to reshape our understanding of the human mind.